Human Like Computing

How do you grasp a bottle of milk nestling behind some yoghurt pots in a cluttered fridge? Humans use visual information to plan and select such skilled actions on external objects with great ease and speed, an ability acquired over the history of the species and refined as each child develops. Robots, by contrast, struggle.

Indeed, whilst artificial intelligence has made great leaps in beating the best of humanity at tasks such as chess and Go, the planning and execution abilities of today's robotic technology are trumped by those of the average toddler. Given the complex and unpredictable world in which we find ourselves, these apparently trivial tasks are the product of highly sophisticated neural computations that generalise and adapt to changing situations, continually selecting between multiple goals and action options.

Our aim is to investigate how such computations could be transferred to robots, enabling them to manipulate objects more efficiently and in a more human-like way than is presently the case, and to perform manipulation tasks that are currently beyond the state of the art. We will develop a computational model that provides a formal foundation for testing hypotheses about the factors that bias behaviour, and ultimately use this model to predict the behaviour most likely to occur in response to a given perceptual (visual) input in this context. We reason that a computational understanding of how humans perform these actions can bridge the robot-human skill gap.

Human-like Planning (HLP) for Reaching in Cluttered Environments

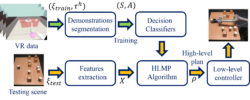

We used virtual reality (VR) to capture human participants reaching for a target object on a tabletop cluttered with obstacles. From these recordings, we devised a qualitative representation of the task space that abstracts the decision making irrespective of the number of obstacles. Based on this representation, the human demonstrations were segmented and used to train decision classifiers. Using these classifiers, our planner produced a list of waypoints in task space. These waypoints provided a high-level plan, which could be transferred to an arbitrary robot model and used to initialise a local trajectory optimiser.
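The pipeline described above (abstract the scene, learn decisions from demonstrations, emit waypoints, seed an optimiser) can be illustrated with a toy sketch. It is not the HLP implementation from the project repository. The gap-based abstraction, the two features (gap width and lateral offset from the target), the hand-rolled logistic-regression classifier, the made-up demonstrations and all function names (`extract_gaps`, `train_logistic`, `plan_waypoints`, `densify`) are hypothetical choices made for illustration only.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) on the tabletop in metres; y points away from the robot

@dataclass
class Gap:
    centre: Point          # candidate waypoint through this gap
    features: List[float]  # [gap width, lateral offset of gap centre from the target]

def extract_gaps(obstacles: List[Point], start: Point, target: Point,
                 table_width: float) -> List[Gap]:
    """Hypothetical qualitative abstraction: obstacles lying between start and
    target split the table into lateral gaps, each described by the same two
    features however many obstacles there are. Obstacles are treated as points."""
    mid_y = (start[1] + target[1]) / 2.0
    xs = sorted(x for x, y in obstacles if start[1] < y < target[1])
    edges = [0.0] + xs + [table_width]
    gaps = []
    for left, right in zip(edges, edges[1:]):
        centre_x = (left + right) / 2.0
        gaps.append(Gap((centre_x, mid_y), [right - left, abs(centre_x - target[0])]))
    return gaps

def score(model, features: List[float]) -> float:
    """Logistic probability that a person would reach through this gap."""
    w, b = model
    z = sum(wi * xi for wi, xi in zip(w, features)) + b
    return 1.0 / (1.0 + math.exp(-z))

def build_training_set(demos, table_width: float):
    """Each demo is (obstacles, start, target, index of the gap the person used);
    the chosen gap is a positive example and every other gap a negative one."""
    X, y = [], []
    for obstacles, start, target, chosen in demos:
        for i, gap in enumerate(extract_gaps(obstacles, start, target, table_width)):
            X.append(gap.features)
            y.append(1.0 if i == chosen else 0.0)
    return X, y

def train_logistic(X, y, lr: float = 0.5, epochs: int = 2000):
    """Binary logistic regression fitted by batch gradient descent."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = score((w, b), xi) - yi
            grad_w = [g + err * f for g, f in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def plan_waypoints(model, obstacles, start, target, table_width) -> List[Point]:
    """High-level plan: start -> centre of the highest-scoring gap -> target."""
    gaps = extract_gaps(obstacles, start, target, table_width)
    best = max(gaps, key=lambda g: score(model, g.features))
    return [start, best.centre, target]

def densify(waypoints: List[Point], n_per_segment: int = 5) -> List[Point]:
    """Linearly interpolate between waypoints, e.g. to seed a local trajectory optimiser."""
    path = []
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        for k in range(n_per_segment):
            t = k / n_per_segment
            path.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    path.append(waypoints[-1])
    return path

# Made-up demonstrations in which the person always reached through the widest gap.
TABLE_W, START, TARGET = 1.0, (0.5, 0.0), (0.5, 1.0)
demos = [
    ([(0.2, 0.5), (0.4, 0.5)], START, TARGET, 2),
    ([(0.6, 0.5), (0.8, 0.5)], START, TARGET, 0),
    ([(0.3, 0.5), (0.7, 0.5)], START, TARGET, 1),
]
model = train_logistic(*build_training_set(demos, TABLE_W))
scene = [(0.15, 0.5), (0.35, 0.5), (0.9, 1.2)]  # the last obstacle lies beyond the target
plan = plan_waypoints(model, scene, START, TARGET, TABLE_W)
path = densify(plan)
print(plan)
```

With these toy demonstrations the learned weights favour wide gaps, so in the new scene the planner routes through the wide gap on the right, and `densify` turns the three waypoints into an initial path a local optimiser could refine. Swapping in richer features or an off-the-shelf classifier would leave the overall structure of the sketch unchanged.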


Team

Mohamed Hasan, Matthew Warburton, Wisdom C. Agboh, Mehmet R. Dogar, Matteo Leonetti, He Wang, Faisal Mushtaq, Mark Mon-Williams, Anthony G. Cohn

Funding

This work is funded by the EPSRC (EP/R031193/1) under their Human Like Computing initiative. The 3rd author was supported by an EPSRC studentship (1879668) and the EU Horizon 2020 research and innovation programme under grant agreement 825619 (AI4EU). The 7th, 8th and 9th authors are supported by Fellowships from the Alan Turing Institute.

Software

https://github.com/m-hasan-n/hlp

Dataset

- Mohamed Hasan, Matthew Warburton, Wisdom C. Agboh, Mehmet R. Dogar, Matteo Leonetti, He Wang, Faisal Mushtaq, Mark Mon-Williams and Anthony G. Cohn (2020): "Learning manipulation planning from VR human demonstrations," University of Leeds. [Dataset] https://doi.org/10.5518/780.

Key Publication

- M. Hasan, M. Warburton, W. C. Agboh, M. R. Dogar, M. Leonetti, H. Wang, F. Mushtaq, M. Mon-Williams and A. G. Cohn, "Human-like Planning for Reaching in Cluttered Environments," in ICRA 2020. arXiv version here.

Other Publications

- Papallas, Rafael, and Mehmet R. Dogar. "Non-Prehensile Manipulation in Clutter with Human-In-The-Loop." To appear in ICRA 2020. arXiv preprint arXiv:1904.03748 (2020).

- Mushtaq, Faisal, et al. "Distinct Processing of Selection and Execution Errors in Neural Signatures of Outcome Monitoring." bioRxiv (2019): 853317.

- Foglino, Francesco, et al. "Curriculum learning for cumulative return maximization." arXiv preprint arXiv:1906.06178 (2019).

- Foglino, Francesco, Christiano Coletto Christakou, and Matteo Leonetti. "An optimization framework for task sequencing in curriculum learning." 2019 Joint IEEE 9th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob). IEEE, 2019.

- Bejjani, Wissam, Mehmet R. Dogar, and Matteo Leonetti. "Learning Physics-Based Manipulation in Clutter: Combining Image-Based Generalization and Look-Ahead Planning." arXiv preprint arXiv:1904.02223 (2019).

- Brookes, Jack, et al. "Studying human behavior with virtual reality: The Unity Experiment Framework." Behavior Research Methods (2019): 1-9.

- Wang, He, et al. "Spatio-temporal Manifold Learning for Human Motions via Long-horizon Modeling." IEEE Transactions on Visualization and Computer Graphics (2019).

- Raw, Rachael K., et al. "Skill acquisition as a function of age, hand and task difficulty: Interactions between cognition and action." PLoS ONE 14.2 (2019).

Press

- https://techxplore.com/news/2020-03-human-like-planner-robots-cluttered-environments.html

Other robot manipulation research

For other robot manipulation work in the School of Computing at the University of Leeds, see here.