Robotic Manipulation Planning for Human-Robot Collaboration

This project was funded by the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No. 746143. The Fellow is Mehmet Dogar, and the project was conducted at the University of Leeds. The project ran for two years, from 1/5/2017 to 30/4/2019. Below, we summarize the results and publications from this project.

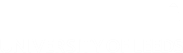

This project focused on robotic manipulation planning for human-robot interaction during forceful collaboration. Take the example in the figure above, where a human is drilling four holes into a board and then cutting a piece off it. As the human applies the operations, the robot grasps the board for the human, occasionally changing its grasp on the object, always trying to maximize the stability and smoothness of the interaction.

The project addressed several technical challenges associated with this problem. First, it addressed the algorithmic challenge of planning a sequence of grasps for multiple manipulators (e.g. the bimanual robot in the figure above). Second, it addressed the problem of modelling the forces the human applies during the interaction. Third, it addressed the problem of human body posture during the interaction. Finally, it addressed the problem of integrating these algorithms on a real robot system.

A key task in this project was the development of a planning algorithm that takes a description of a forceful task and outputs a robot plan: a set of grasps on the object, and the robot motion trajectories that connect these grasps to each other. The core algorithm has been published at the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). You can find the original paper here and an open-access version here. A follow-up extension to the planner has been submitted to the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

In the video below, you can see the planner in action. Imagine grasping a wooden board while your friend drills holes into it and cuts pieces off it. You would predict the forces your friend will apply on the board and choose your grasps accordingly; for example, you would rest your palm firmly against the board to hold it stable against the large drilling forces. The manipulation planner enables a robot to grasp objects similarly:

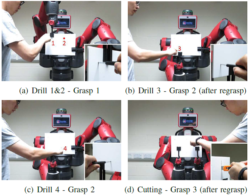

The algorithm is hierarchical, committing to different constraints at different layers of planning. These layers are illustrated in the figure below. At the top layer, the planner chooses grasps on the object for the different manipulators (arms) of the robot. The sequence of grasps is chosen so that the grasps resist the external forces in a stable manner, but also so that the number of different grasps is minimized, for a smooth interaction. In the second layer, the switch from one grasp to the next is planned, in terms of releasing certain grippers and adding new ones. In the third layer, object configurations are identified that keep the object stable under gravity, even if certain grippers are released. Finally, in the fourth layer, constrained motion planning is performed to connect these configurations together.
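
The top layer's trade-off between stability and few grasp changes can be illustrated with a small Python sketch. This is not the published planner: it is a simple greedy stand-in that assumes the set of stable grasps for each operation is already known. The function name `plan_grasp_sequence` and the grasp labels are hypothetical, chosen only for illustration.

```python
from typing import List, Set


def plan_grasp_sequence(stable_grasps: List[Set[str]]) -> List[str]:
    """Pick one grasp per operation while changing grasps as rarely as possible.

    stable_grasps[i] is an assumed, precomputed set of grasp labels that are
    stable against the forces of operation i. The greedy rule keeps the grasps
    that are stable for every operation in the current run, and starts a new
    run (a grasp change) only when no such grasp remains.
    """
    plan: List[str] = []
    candidates = None  # grasps stable for all operations in the current run
    run_length = 0
    for i, options in enumerate(stable_grasps):
        if not options:
            raise ValueError(f"no stable grasp for operation {i}")
        shared = set(options) if candidates is None else candidates & options
        if shared:
            # The current run can be extended without regrasping.
            candidates = shared
            run_length += 1
        else:
            # Close the current run with one of its shared grasps, then regrasp.
            plan.extend([min(candidates)] * run_length)
            candidates = set(options)
            run_length = 1
    if candidates is not None:
        plan.extend([min(candidates)] * run_length)
    return plan


# Hypothetical task: four drilling operations followed by one cut.
task = [
    {"palm_a", "pinch_b"},
    {"palm_a"},
    {"palm_a", "palm_c"},
    {"palm_c"},
    {"pinch_b", "palm_c"},
]
print(plan_grasp_sequence(task))
```

In this example the sketch holds one grasp for the first three operations and regrasps once for the last two. The real planner additionally has to check, in the lower layers, that each regrasp is physically achievable.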

Later, we extended this planner to use not only the grippers of the robot manipulators but also environmental contacts, e.g. a table surface shared between the robot and the human. The algorithm treats such surfaces as contacts that can be exploited to keep the object stable against the forces applied by the human. This extended algorithm has been submitted to the IEEE/RSJ IROS 2019 conference. A video from this planner is presented below:

We developed a way to measure the amount of space between the human and the robot during the collaboration, and we provided an optimization framework to maximize this distance while still holding the object at a pose appropriate for the collaboration. We compared people's perception of safety and comfort using human subject experiments. These metrics and the results were presented at the IEEE-RAS Humanoids 2018 conference. You can find the original paper here, and an open-access version here.
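
As a rough illustration of the idea, the Python sketch below picks, among a few candidate robot configurations, the one with the largest clearance from the human, considering only candidates that hold the object at an acceptable pose. The clearance measure used here (minimum pairwise distance between sampled points on the robot and on the human), the candidate format, and all names are assumptions made for this example; the metric and optimization in the paper may differ.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# A candidate is (label, sampled robot points, whether the object pose is acceptable).
Candidate = Tuple[str, Sequence[Point], bool]


def clearance(robot_points: Sequence[Point], human_points: Sequence[Point]) -> float:
    """Assumed clearance measure: smallest distance between any robot/human point pair."""
    return min(math.dist(r, h) for r in robot_points for h in human_points)


def pick_max_clearance(candidates: List[Candidate],
                       human_points: Sequence[Point]) -> Tuple[str, float]:
    """Return the label and clearance of the best candidate with an acceptable pose."""
    feasible = [
        (clearance(points, human_points), label)
        for label, points, pose_ok in candidates
        if pose_ok
    ]
    if not feasible:
        raise ValueError("no candidate holds the object at an acceptable pose")
    best_clearance, best_label = max(feasible)
    return best_label, best_clearance


# Hypothetical data: one point on the human, three candidate configurations.
human = [(0.0, 0.0, 0.0)]
options = [
    ("near", [(0.3, 0.0, 0.0)], True),
    ("far", [(0.8, 0.0, 0.0)], True),
    ("farthest_but_bad_pose", [(2.0, 0.0, 0.0)], False),
]
print(pick_max_clearance(options, human))
```

The candidate that is farthest from the human is rejected because its object pose is not acceptable, so the sketch selects the farthest of the remaining candidates.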